Judy Hanwen Shen

I am a PhD student in the Theory Group in the Computer Science Department at Stanford University. I am fortunate to be advised by Omer Reingold. I am currently working on human-AI collaboration and understanding the societal impacts of advanced AI.

I am an advocate for PhD student well-being. I led the most recent PhD Climate Survey in the Stanford Computer Science Department. I also write about various aspects of graduate student life on my blog. From time to time, I donate my hair, and you can too!

email: jhshen [at] stanford [dot] edu

Publications

(αβ) indicates author list in alphabetical order, (*) indicates equal contribution

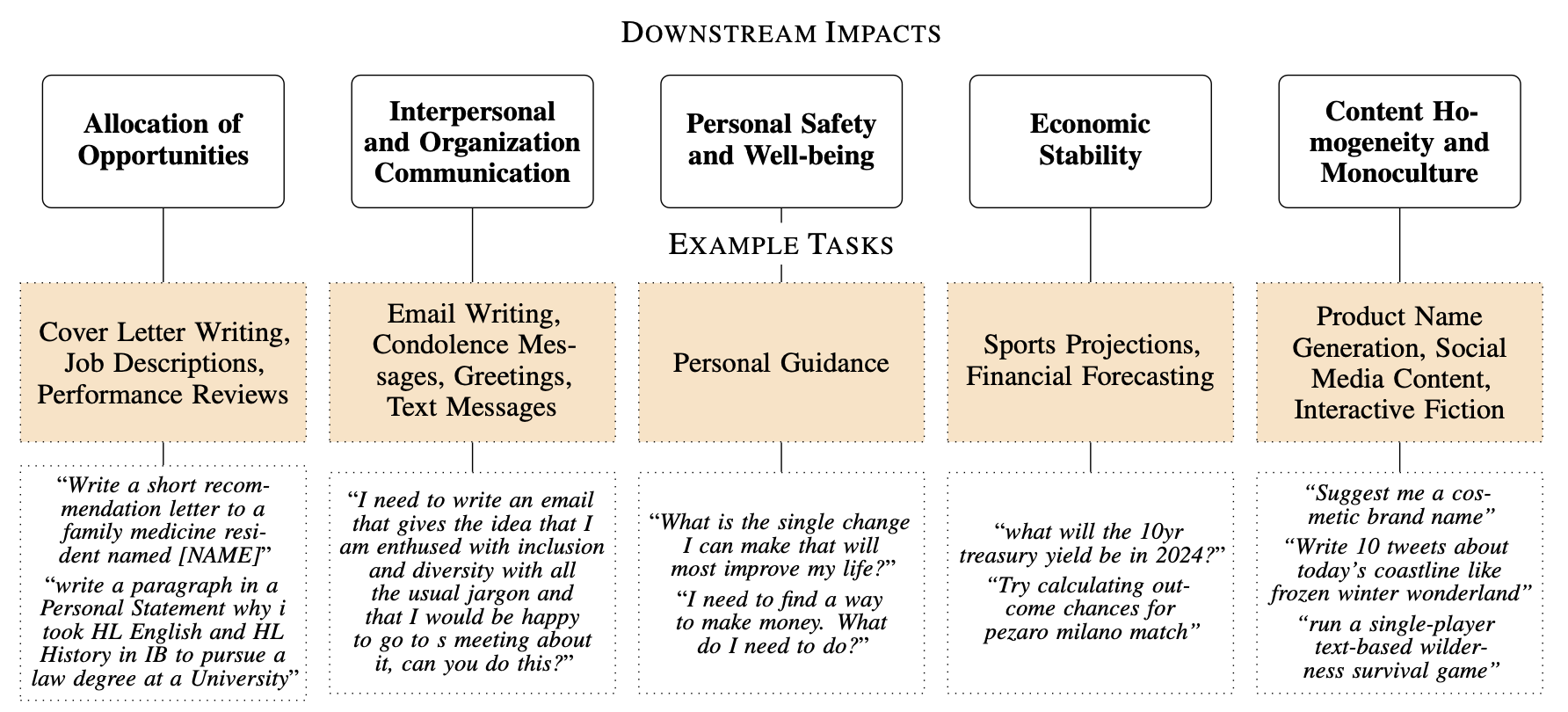

Societal Impacts Research Requires Benchmarks for Creative Composition Tasks

JH Shen and Carlos Guestrin

International Conference on Machine Learning 2025 (ICML)

BiAlign Workshop at ICLR 2025: Best Societal Impacts Paper Award 🎉

Everyday creativity tasks done by AI will impact society; we need to measure them better.

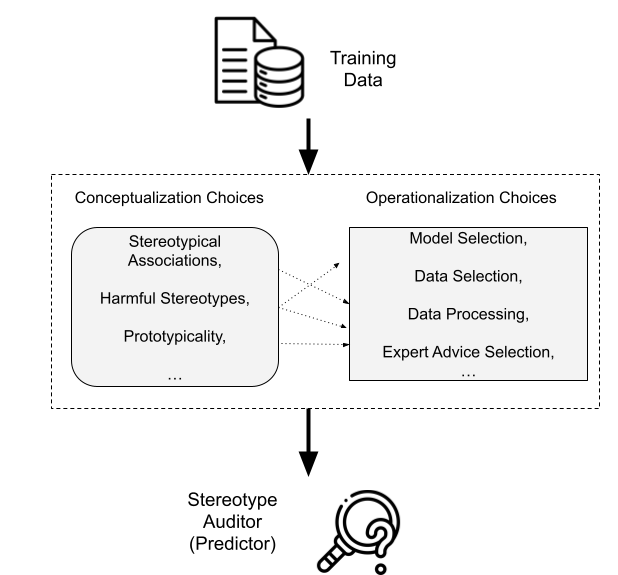

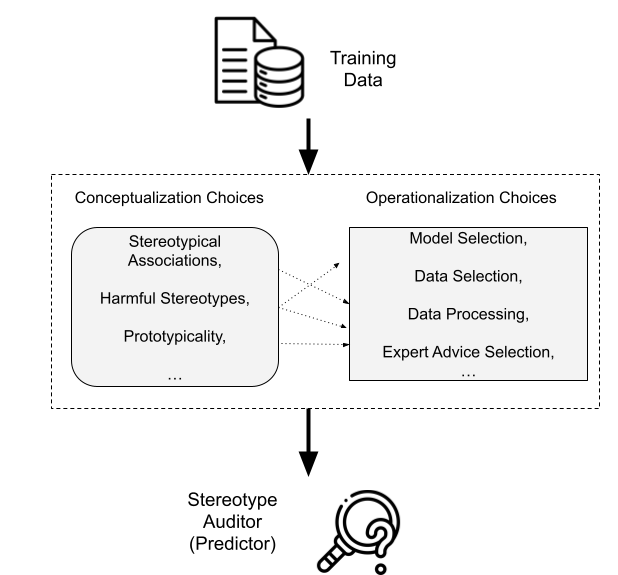

Fairness with respect to Stereotype Predictors: Impossibilities and Best Practices

with Inbal Livni Navon and Omer Reingold (αβ)

Transactions on Machine Learning Research May 2025 (TMLR)

We introduce a unified framework of "stereotype predictors" to systematically measure and mitigate representational harms in AI systems.

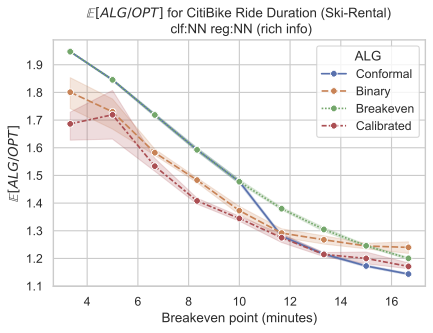

Algorithms with Calibrated Machine Learning Predictions

with Ellen Vitercik and Anders Wikum (αβ)

International Conference on Machine Learning 2025 (ICML)

ICML Spotlight 🎉

Calibrated machine learning predictions can significantly improve online algorithms by providing more reliable uncertainty estimates, with applications to ski rental and job scheduling problems.

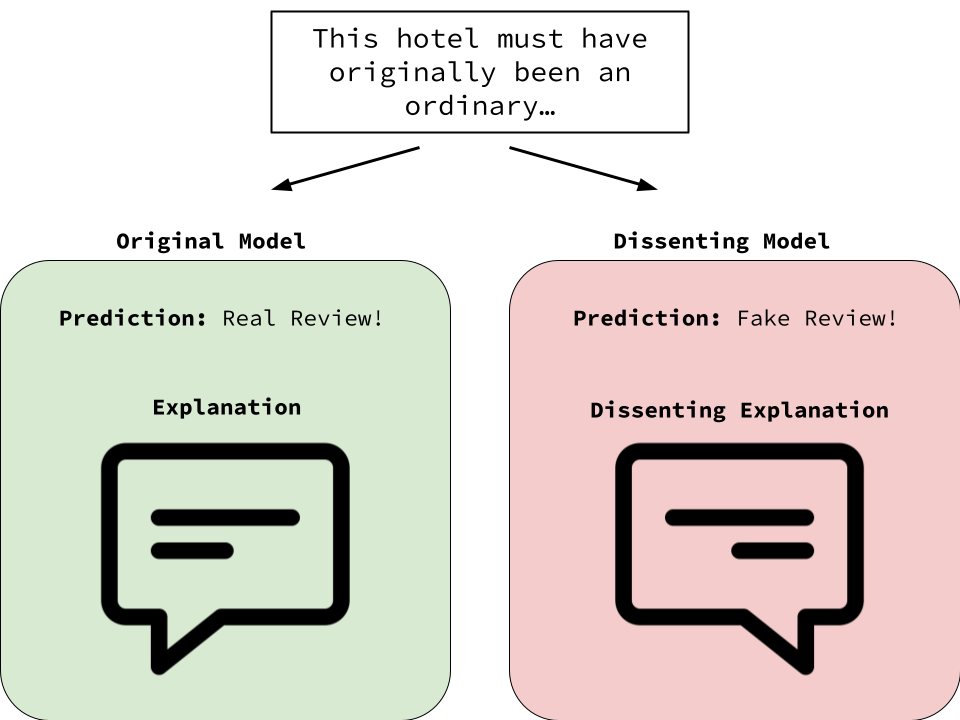

Dissenting Explanations: Leveraging Disagreement to Reduce Model Overreliance

with Omer Reingold and Aditi Talati (αβ)

AAAI Conference on Artificial Intelligence 2024 (AAAI)

When explanations can argue both for and against a model decision, humans make better decisions.

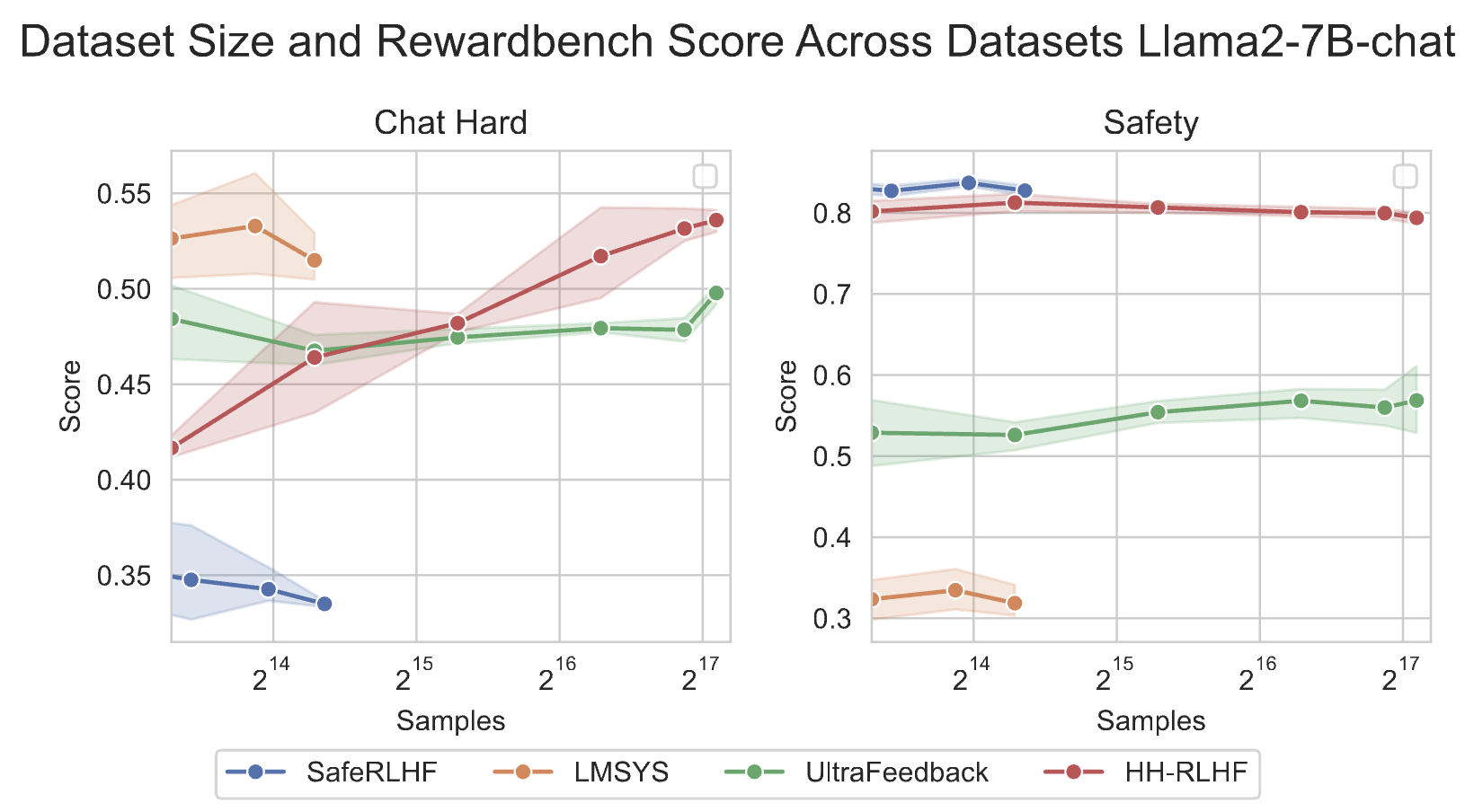

Towards Data-Centric RLHF: Simple Metrics for Preference Dataset Comparison

JH Shen, Archit Sharma, Jun Qin

ATTRIB Workshop @ NeurIPS 2024

Adding data is still not always better, even in reward modeling. We give simple metrics to compare preference datasets.

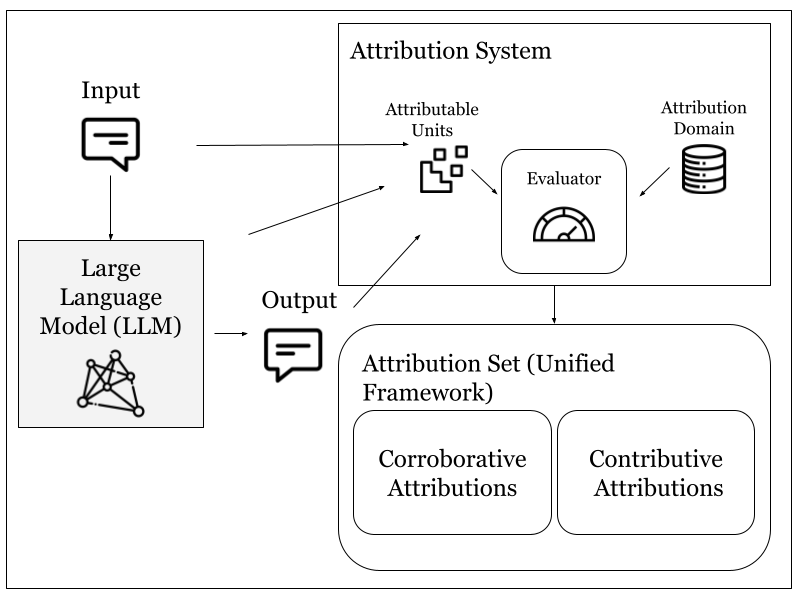

Unifying Corroborative and Contributive Attributions in Large Language Models

Teddi Worledge*, JH Shen*, Nicole Meister, Caleb Winston, Carlos Guestrin

IEEE Conference on Secure and Trustworthy Machine Learning 2024 (SaTML)

Modern LLM applications require both factuality and training data attributions; we introduce a unified model for both.

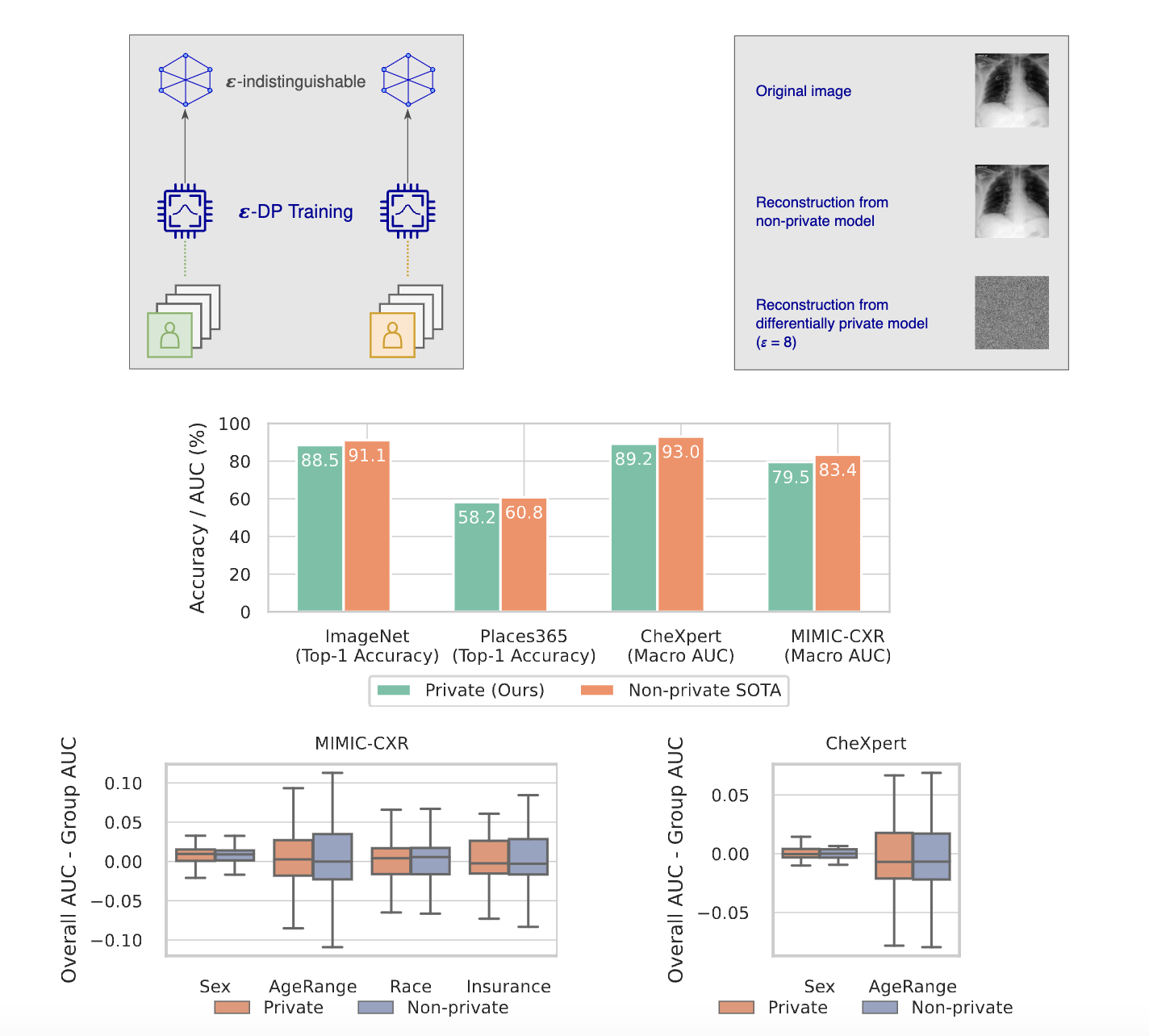

Unlocking Accuracy and Fairness in Differentially Private Image Classification

L. Berrada*, S. De*, JH Shen*, J. Hayes, R. Stanforth, D. Stutz, P. Kohli, S.L. Smith, B. Balle

Pre-trained foundation models fine-tuned with differential privacy can achieve accuracies comparable to non-private classifiers while maintaining fairness across demographic groups, making privacy-preserving machine learning practically viable for sensitive datasets.

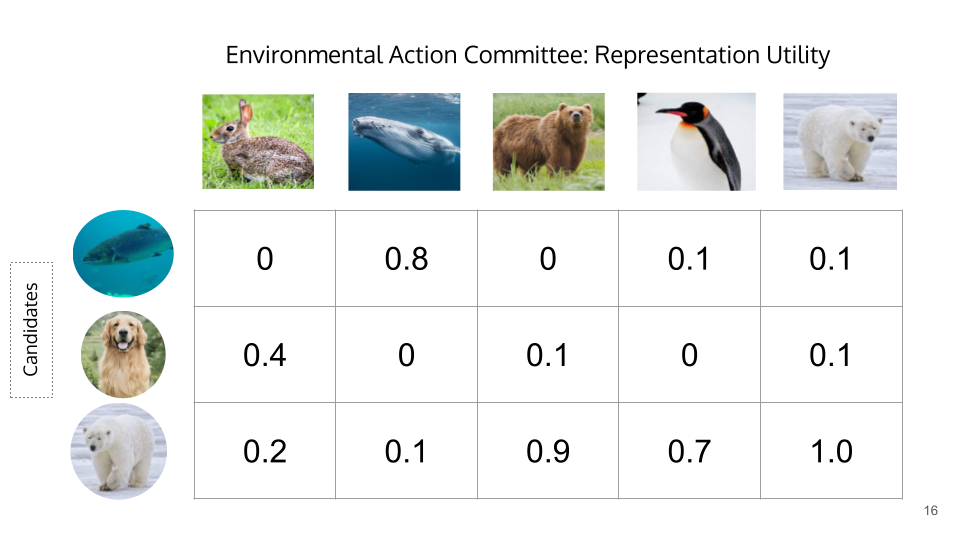

Leximax Approximations and Representative Cohort Selection

with Monika Henzinger, Charlotte Peale and Omer Reingold (αβ)

3rd annual Symposium on Foundations of Responsible Computing (FORC). 2022.

We introduce new notions of approximate leximax and give a polynomial-time algorithm for finding a representative cohort.

2025

Formalizing Fairness with respect to Stereotype Predictors

IL. Navon, O. Reingold, JH. Shen (αβ)

Transactions on Machine Learning Research (TMLR) 2025.

[ talk ]

[ code ]

Algorithms with Calibrated Machine Learning Predictions

JH. Shen, E. Vitercik, A. Wikum (αβ)

International Conference on Machine Learning (ICML) 2025.

ICML Spotlight 🎉

[ code ]

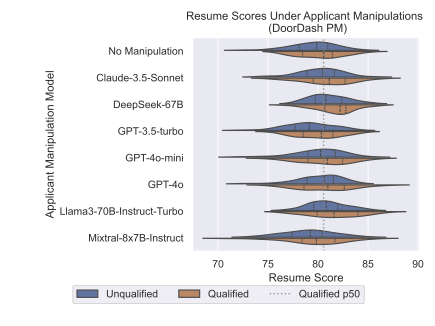

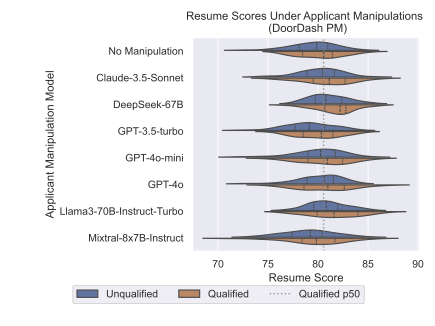

Two Tickets are Better than One: Fair and Accurate Hiring Under Strategic LLM Manipulations

L. Cohen, C. Hong, J. Hsieh, JH. Shen (αβ)

International Conference on Machine Learning (ICML) 2025.

[ code ]

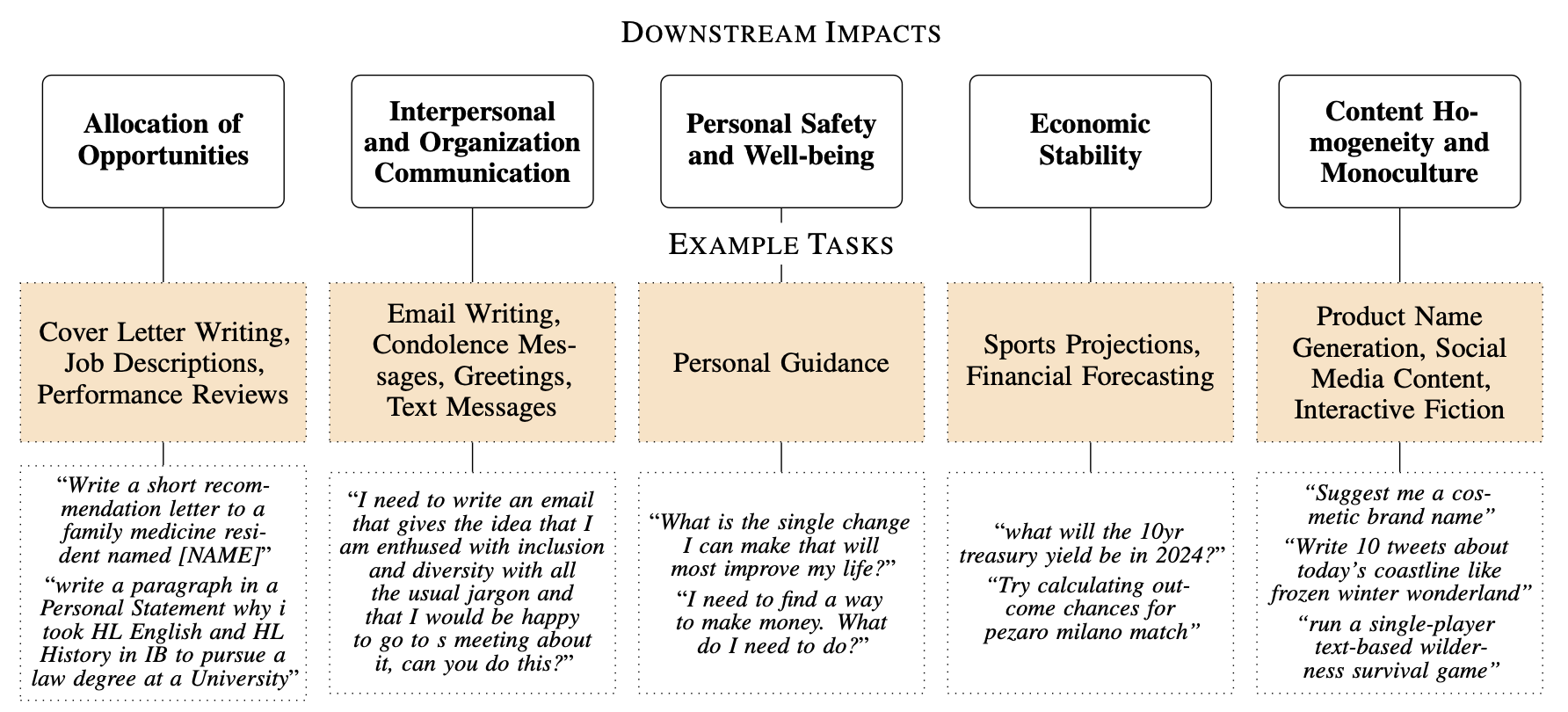

Societal Impacts Research Requires Benchmarks for Creative Composition Tasks

JH. Shen, C. Guestrin

International Conference on Machine Learning (ICML) 2025.

BiAlign Workshop at ICLR 2025: Best Societal Impacts Paper Award 🎉

[ data ]

2024

Towards Data-Centric RLHF: Simple Metrics for Preference Dataset Comparison

JH. Shen, A. Sharma, J. Qin

ATTRIB Workshop @ NeurIPS 2024

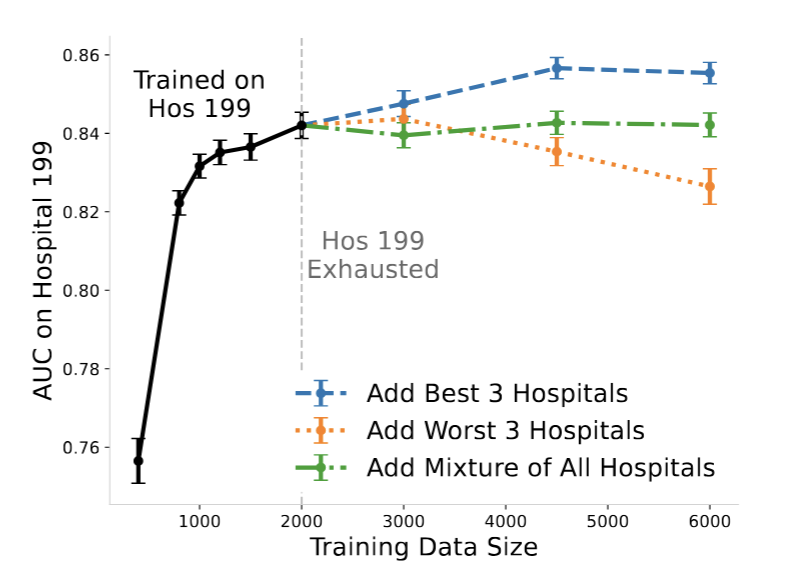

The Data Addition Dilemma

JH. Shen, ID. Raji, IY. Chen

Machine Learning for Healthcare 2024 (MLHC)

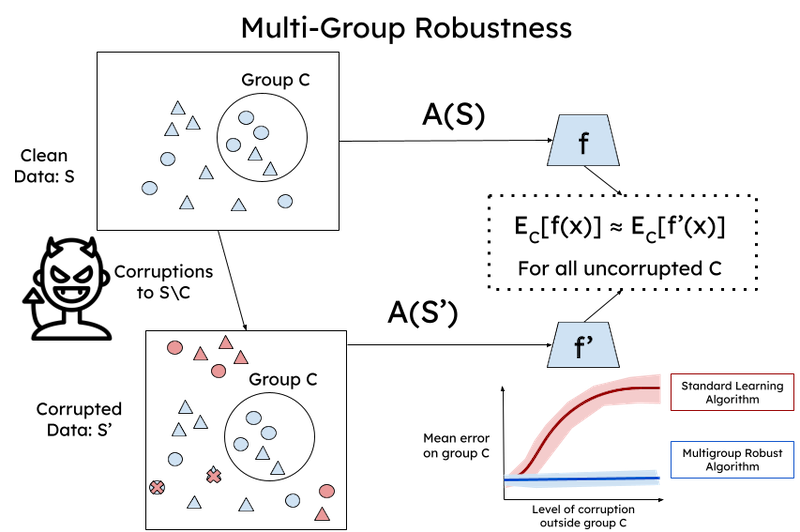

Multigroup Robustness

L. Hu, C. Peale, JH. Shen (αβ)

41st International Conference on Machine Learning (ICML 2024)

Unifying Corroborative and Contributive Attributions in Large Language Models

T. Worledge*, JH. Shen*, N. Meister, C. Winston, C. Guestrin

2nd IEEE Conference on Secure and Trustworthy Machine Learning. 2024.

Contributed talk at NeurIPS ATTRIB Workshop. 2023.

Dissenting Explanations: Leveraging Disagreement to Reduce Model Overreliance

O. Reingold, JH. Shen, A. Talati (αβ)

38th Annual Conference on Artificial Intelligence (AAAI). 2024.

2023

Unlocking Accuracy and Fairness in Differentially Private Image Classification

L. Berrada*, S. De*, JH Shen*, J. Hayes, R. Stanforth, D. Stutz, P. Kohli, S.L. Smith, B. Balle

Preprint 2023.

[ blog post ]

Bidding Strategies for Proportional Representation in Advertisement Campaigns

IL. Navon, C. Peale, O. Reingold, JH. Shen (αβ)

4th annual Symposium on Foundations of Responsible Computing (FORC). 2023.

2022

Leximax Approximations and Representative Cohort Selection

M. Henzinger, C. Peale, O. Reingold, JH. Shen (αβ)

3rd annual Symposium on Foundations of Responsible Computing (FORC). 2022.

2021

Fast and Memory Efficient Differentially Private-SGD via JL Projections

Z. Bu, S. Gopi, J. Kulkarni, YT. Lee, JH. Shen, U. Tantipongpipat (αβ)

Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021). 2021.

Accuracy, Interpretability, and Differential Privacy via Explainable Boosting

H. Nori, R. Caruana, Z. Bu, JH. Shen, J. Kulkarni.

38th International Conference on Machine Learning (ICML 2021). 2021.

Differentially Private Set Union

S. Gopi, P. Gulhane, J. Kulkarni, JH. Shen, M. Shokouhi, S. Yekhanin (αβ)

Journal of Privacy and Confidentiality (JPC) 2021.

Previous version at ICML 2020.

Contributed Talk at TPDP 2020.

[ talk ]

[ code ]

2020

Human-centric dialog training via offline reinforcement learning

N. Jaques*, JH. Shen*, A. Ghandeharioun, C. Ferguson, A. Lapedriza, N. Jones, S.S. Gu, R. Picard.

Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020). 2020.

Previous version: Way Off-Policy Batch Deep Reinforcement Learning of Implicit Human Preferences in Dialog

Contributed talk at NeurIPS workshop on Conversational AI 2019.

[ data ]

[ code ]

Hierarchical reinforcement learning for open-domain dialog

A. Saleh, N. Jaques, A. Ghandeharioun, JH. Shen, R. Picard.

The Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI 2020). 2020.

[ data ]

[ code ]

2019

Approximating Interactive Human Evaluation with Self-Play for Open-Domain Dialog Systems

A. Ghandeharioun*, JH. Shen*, N. Jaques*, C. Ferguson, N. Jones, A. Lapedriza, R. Picard.

33rd Conference on Neural Information Processing Systems (NeurIPS 2019). 2019.

[ data ]

[ code ]

The Popstar, the Poet, and the Grinch: Relating Artificial Intelligence to the Computational Thinking Framework with Block-based Coding

J Van Brummelen, JH Shen, EW Patton.

Proceedings of International Conference on Computational Thinking Education. 2019.

Unintentional affective priming during labeling may bias labels

JH. Shen, A. Lapedriza, R. Picard.

8th International Conference on Affective Computing and Intelligent Interaction (ACII). 2019.

2018

Darling or Babygirl? Investigating Stylistic Bias in Sentiment Analysis

JH. Shen*, L. Fratamico*, I. Rahwan, A. M. Rush.

5th Workshop on Fairness, Accountability, and Transparency in Machine Learning. 2018.

Contributed talk

Comparing Models of Associative Meaning: An Empirical Investigation of Reference in Simple Language Games

JH. Shen*, M. Hofer*, B. Felbo, R. Levy.

Proceedings of the 22nd Conference on Computational Natural Language Learning (CoNLL 2018). 2018.

Oral presentation

[ code + data ]

TuringBox: An Experimental Platform for the Evaluation of AI Systems

Z. Epstein, B.H Payne, JH. Shen, CJ. Hong, B. Felbo, A. Dubey, M. Groh, N. Obradovich, M. Cebrian & I. Rahwan.

5th Workshop on Fairness, Accountability, and Transparency in Machine Learning. 2018.

Contributed talk

Closing the AI Knowledge Gap

Z. Epstein, B.H Payne, JH. Shen, A. Dubey, B. Felbo, M Groh, N. Obradovich, M. Cebrian & I. Rahwan

Preprint. 2018.

2017

Mental Health and NLP

Detecting Anxiety through Reddit

JH. Shen, F. Rudzicz.

Proceedings of the Fourth Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality. 2017.

[ code ] [data: (available by request)]
